- #How to install spark on mac 64 Bit#

- #How to install spark on mac update#

- #How to install spark on mac full#

- #How to install spark on mac code#

Do not go any further until Java is set up and validated successfully.
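One way to validate the Java setup is to run `java -version` and read the reported version. The sketch below does this from Python; the helper names `parse_java_major` and `installed_java_major` are made up for illustration, and the threshold of 8 comes from the "Java 8 or higher" requirement in the install steps.

```python
import re
import shutil
import subprocess


def parse_java_major(version_output):
    """Extract the major Java version from `java -version` output, or None."""
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if match is None:
        return None
    major = int(match.group(1))
    # Java 8 and earlier report themselves as 1.x (for example "1.8.0_292").
    if major == 1 and match.group(2) is not None:
        return int(match.group(2))
    return major


def installed_java_major():
    """Return the major version of the `java` found on PATH, or None."""
    if shutil.which("java") is None:
        return None
    try:
        result = subprocess.run(
            ["java", "-version"], capture_output=True, text=True, timeout=30
        )
    except (OSError, subprocess.SubprocessError):
        return None
    # `java -version` normally writes to stderr, so look at both streams.
    return parse_java_major(result.stderr + result.stdout)


if __name__ == "__main__":
    major = installed_java_major()
    if major is None:
        print("No working Java found on PATH")
    elif major < 8:
        print(f"Java {major} found, but Java 8 or higher is needed")
    else:
        print(f"Java {major} found")
```

If this reports no working Java, fix that first and only then continue with the Spark steps.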

Here is a full example of a standalone application to test PySpark locallyĬount = sc.parallelize(range(0, NUM_SAMPLES)) \

Sc = pyspark.SparkContext(appName="myAppName") To install findspark just type: $ pip3 install findsparkĪnd then on your IDE (I use Eclipse and Pydev) to initialize PySpark, just call: You can address this by adding PySpark to sys.path at runtime. Sometimes you need a full IDE to create more complex code, and PySpark isn’t on sys.path by default, but that doesn’t mean it can’t be used as a regular library. The result: Running PySpark in your favorite IDE To check if your notebook is initialized with SparkContext, you could try the following codes in your notebook:ĭots = sc.parallelize().cache() The PySpark context can be sc = SparkContext.getOrCreate() Create a new notebook by clicking on ‘New’ > ‘Notebooks Python ’. This command should start a Jupyter Notebook in your web browser. Restart (our just source) your terminal and launch PySpark: $ pyspark Your ~/.bash_profile file may look like this: Just add these lines to your ~/.bash_profile file: export PYSPARK_DRIVER_PYTHON=jupyterĮxport PYSPARK_DRIVER_PYTHON_OPTS='notebook'

#How to install spark on mac update#

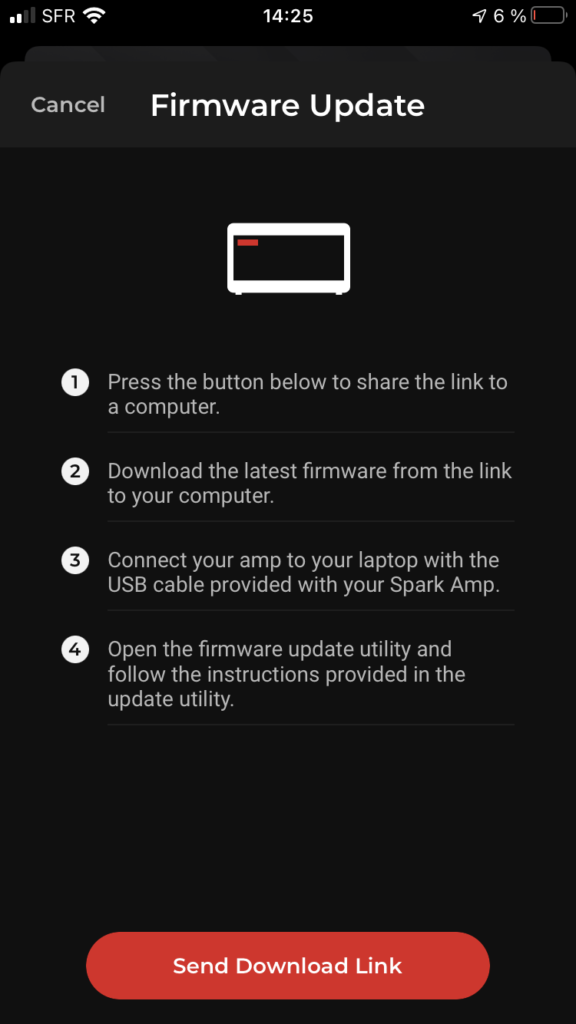

Now to run PySpark in Jupyter you’ll need to update the PySpark driver environment variables. # For python 3, You have to add the line below or you will get an error To do so, edit your bash file: $ nano ~/.bash_profileĬonfigure your $PATH variables by adding the following lines to your ~/.bash_profile file: export SPARK_HOME=/opt/spark To find what shell you are using, type: $ echo $SHELL Lrwxr-xr-x 1 root wheel 16 Dec 26 15:08 /opt/spark̀ -> /opt/spark-2.4.0įinally, tell your bash where to find Spark. The contents of a symbolic link are the address of the actual file or folder that is being linked to.Ĭreate a symbolic link (this will let you have multiple spark versions): $ sudo ln -s /opt/spark-2.4.0 /opt/spark̀Ĭheck that the link was indeed created $ ls -l /opt/spark̀ $ sudo mv spark-2.4.0-bin-hadoop2.7 /opt/spark-2.4.0Ī symbolic link is like a shortcut from one file to another. Unzip it and move it to your /opt folder: $ tar -xzf spark-2.4.0-bin-hadoop2.7.tgz Select the latest Spark release, a prebuilt package for Hadoop, and download it directly. Make sure you have Java 8 or higher installed on your computer and visit the Spark download page Install Jupyter notebook $ pip3 install jupyter Install PySpark
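Adding PySpark to sys.path at runtime, as described for IDE use, can be sketched roughly as follows. This is only an illustration of the idea, not findspark's actual implementation. The function name `add_pyspark_to_path` is hypothetical, and the sketch assumes the usual layout of a Spark download, with the `pyspark` package under `python/` and a py4j zip archive under `python/lib/`.

```python
import glob
import os
import sys


def add_pyspark_to_path(spark_home):
    """Put Spark's bundled Python packages on sys.path.

    Returns the list of entries that were newly added.
    """
    python_dir = os.path.join(spark_home, "python")
    # The py4j archive name contains a version number, so match it by pattern.
    py4j_zips = sorted(glob.glob(os.path.join(python_dir, "lib", "py4j-*.zip")))
    added = []
    for entry in [python_dir] + py4j_zips:
        if entry not in sys.path:
            # Insert at the front so these copies win over any other install.
            sys.path.insert(0, entry)
            added.append(entry)
    return added
```

With the symbolic link from the install steps in place, calling `add_pyspark_to_path(os.environ.get("SPARK_HOME", "/opt/spark"))` before `import pyspark` should make the import work in a plain Python session. In practice findspark is the more convenient choice.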
